From Idea to Reality: Building bolt.diy for AI-Powered Web Apps

Project Genesis

Unleashing Creativity with bolt.diy: My Journey into AI-Powered Web Development

From Idea to Implementation

Initial Research and Planning

Technical Decisions and Their Rationale

Alternative Approaches Considered

Key Insights That Shaped the Project

Under the Hood

Technical Deep-Dive into bolt.diy

1. Architecture Decisions

Modular Design

API Key Management

2. Key Technologies Used

Frontend

- React: The frontend is built using React, allowing for a dynamic and responsive user interface. React’s component-based architecture facilitates the modular design of the application.

- Vercel AI SDK: This SDK is pivotal for integrating various LLMs, providing a consistent API for model interactions.
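Without a unified API, each provider returns generated text in a differently shaped response body, so a hand-rolled integration needs normalization code like the minimal sketch below. This is not the Vercel AI SDK's API. `normalizeCompletion` is a hypothetical helper, and the field paths assume non-streaming responses from OpenAI's Chat Completions API, Anthropic's Messages API, and Ollama's `/api/chat` endpoint.

```javascript
// Hypothetical helper: extract the generated text from each provider's raw
// response body so the rest of the app only ever sees a plain string.
// Assumes non-streaming responses.
function normalizeCompletion(provider, body) {
  switch (provider) {
    case "openai":
      // Chat Completions: { choices: [{ message: { content } }] }
      return body.choices[0].message.content;
    case "anthropic":
      // Messages API: { content: [{ type: "text", text }, ...] }
      // Keep only text blocks and join them in order.
      return body.content
        .filter((block) => block.type === "text")
        .map((block) => block.text)
        .join("");
    case "ollama":
      // /api/chat: { message: { role, content } }
      return body.message.content;
    default:
      throw new Error(`Unknown provider: ${provider}`);
  }
}

const reply = normalizeCompletion("anthropic", {
  content: [{ type: "text", text: "Hello from Claude" }],
});
console.log(reply); // Hello from Claude
```

An SDK that exposes one call signature for every provider removes the need to maintain this kind of branching in application code.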

Backend

- Node.js: The application runs on Node.js, whose non-blocking I/O lets it handle many concurrent API requests, such as calls to LLM providers, without stalling while each response arrives.

- Docker: Docker is used for containerization, allowing developers to run the application in isolated environments. This is particularly useful for ensuring consistency across different development setups.

State Management

- Redux: Redux manages application state, enabling predictable state transitions and easier debugging.
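Predictable state transitions come from routing every change through a pure reducer, a function of the form `(state, action) => newState`. The sketch below uses no Redux library code. Its action types and state fields (`currentModel`, `terminalOutput`) are hypothetical, loosely modeled on the handlers in the next section.

```javascript
// Hypothetical state shape for the chat workspace.
const initialState = { currentModel: null, terminalOutput: [] };

// A Redux-style reducer: a pure function (state, action) => newState.
// It never mutates `state`; it returns a new object instead.
function appReducer(state = initialState, action) {
  switch (action.type) {
    case "model/selected":
      return { ...state, currentModel: action.payload };
    case "terminal/outputAppended":
      return {
        ...state,
        terminalOutput: [...state.terminalOutput, action.payload],
      };
    default:
      // Unknown actions leave the state untouched
      return state;
  }
}

// Replaying a recorded list of actions reproduces the same final state.
const actions = [
  { type: "model/selected", payload: "gpt-4o" },
  { type: "terminal/outputAppended", payload: "npm install finished" },
];
const finalState = actions.reduce(appReducer, initialState);
console.log(finalState.currentModel); // gpt-4o
```

Because the reducer never mutates its input, replaying the same actions always yields the same state, which is what makes this style easier to debug.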

3. Interesting Implementation Details

Dynamic Model Selection

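```javascript
// Called when the user picks a model from the selector.
// setCurrentModel and setApiEndpoint are assumed to be React state setters;
// getApiEndpoint maps a model to its provider's API endpoint.
const handleModelChange = (selectedModel) => {
  setCurrentModel(selectedModel);
  // Update API endpoint based on selected model
  setApiEndpoint(getApiEndpoint(selectedModel));
};
```

Integrated Terminal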

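```javascript
// Run a shell command and append its output to the terminal history.
const handleCommandExecution = async (command) => {
  const output = await executeCommand(command);
  // Functional update: append to the latest state, not a stale copy
  setTerminalOutput((prevOutput) => [...prevOutput, output]);
};
```

Image Attachment for Prompts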

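```javascript
// Read the chosen image and store it as a base64 data URL
// so it can be attached to the next prompt.
const handleImageUpload = (event) => {
  const file = event.target.files[0];
  if (file) {
    const reader = new FileReader();
    // onload fires only on success; onloadend also fires after an error,
    // when reader.result is null
    reader.onload = () => {
      setImageData(reader.result);
    };
    reader.readAsDataURL(file);
  }
};
```

4. Technical Challenges Overcome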

API Integration Complexity
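The `handleModelChange` handler under Dynamic Model Selection calls a `getApiEndpoint` helper that is not shown. A minimal sketch, assuming a static model-to-provider table, could look like the following. The model names are placeholders and the table layout is hypothetical; the base URLs are the providers' public API hosts, and Ollama's is its default local port.

```javascript
// Hypothetical model -> provider table; a real app might load this from config.
const MODEL_PROVIDERS = new Map([
  ["gpt-4o", "openai"],
  ["claude-3-5-sonnet-latest", "anthropic"],
  ["llama3.1", "ollama"],
]);

// Base URL of each provider's HTTP API (Ollama listens on port 11434 by default).
const PROVIDER_BASE_URLS = new Map([
  ["openai", "https://api.openai.com/v1"],
  ["anthropic", "https://api.anthropic.com/v1"],
  ["ollama", "http://localhost:11434/api"],
]);

function getApiEndpoint(selectedModel) {
  const provider = MODEL_PROVIDERS.get(selectedModel);
  if (provider === undefined) {
    // Fail loudly rather than sending the request to the wrong API
    throw new Error(`No provider configured for model "${selectedModel}"`);
  }
  return PROVIDER_BASE_URLS.get(provider);
}

console.log(getApiEndpoint("llama3.1")); // http://localhost:11434/api
```

Keeping both tables in one module turns support for a new model into a data change rather than an edit at every call site.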

Performance Optimization
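Conceptually, `useMemo` remembers the dependency list and result from the previous render and re-runs the computation only when a dependency has changed, comparing each one with `Object.is`. The plain-JavaScript sketch below illustrates that idea outside React. It is not React's implementation; `memoizeLast` is a hypothetical name, and `computeExpensiveValue` is a trivial stand-in for real work.

```javascript
// Cache the result for the most recent dependency list and recompute
// only when some dependency differs (compared with Object.is, as React does).
function memoizeLast(compute) {
  let lastDeps = null;
  let lastValue;
  return (deps) => {
    const changed =
      lastDeps === null ||
      deps.length !== lastDeps.length ||
      deps.some((dep, i) => !Object.is(dep, lastDeps[i]));
    if (changed) {
      lastValue = compute(...deps);
      lastDeps = deps;
    }
    return lastValue;
  };
}

// Count how often the "expensive" function actually runs.
let calls = 0;
const computeExpensiveValue = (input) => {
  calls += 1;
  return input * 2; // stand-in for real work
};

const memoized = memoizeLast(computeExpensiveValue);
memoized([21]); // computes 42
memoized([21]); // same dependency: cached 42, no recomputation
memoized([5]); // dependency changed: computes 10
console.log(calls); // 2
```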

```javascript
import { useMemo } from "react";
const memoizedValue = useMemo(() => computeExpensiveValue(input), [input]); // recomputed only when `input` changes
```

User Experience Enhancements

Conclusion

Lessons from the Trenches

Key Technical Lessons Learned

- Modular Architecture: Integrating multiple LLMs through an extensible architecture has proven a significant advantage. New models can be added and existing ones updated without major overhauls to the codebase.

- Community Contributions: Engaging the community for feature requests and contributions has accelerated development. A public roadmap and a clear list of requested additions have helped prioritize tasks and foster collaboration.

- Docker for Isolation: Using Docker for deployment has simplified setup for users unfamiliar with the intricacies of local development environments. It provides a consistent environment that reduces “it works on my machine” issues.

- API Key Management: A straightforward UI for API key management has improved the user experience, letting users configure their settings without diving into the code.
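Behind a settings UI for API keys there is usually a rule that decides which key a request actually uses. A minimal sketch, assuming keys entered in the UI should override server-side environment variables: `resolveApiKey` and the provider-to-variable table are hypothetical, though `OPENAI_API_KEY` and `ANTHROPIC_API_KEY` are the variables those providers' official SDKs read by default.

```javascript
// Hypothetical mapping from provider to the environment variable holding its key.
const ENV_KEY_NAMES = {
  openai: "OPENAI_API_KEY",
  anthropic: "ANTHROPIC_API_KEY",
};

// Prefer a non-empty key the user entered in the settings UI, fall back to
// the server environment, and return null when neither is set.
function resolveApiKey(provider, uiKeys, env) {
  const fromUi = uiKeys[provider];
  if (typeof fromUi === "string" && fromUi.trim() !== "") {
    return fromUi.trim();
  }
  const envName = ENV_KEY_NAMES[provider];
  const fromEnv = envName ? env[envName] : undefined;
  return fromEnv && fromEnv.trim() !== "" ? fromEnv.trim() : null;
}

resolveApiKey("openai", { openai: "sk-from-ui" }, { OPENAI_API_KEY: "sk-from-env" }); // "sk-from-ui"
resolveApiKey("openai", {}, { OPENAI_API_KEY: "sk-from-env" }); // "sk-from-env"
resolveApiKey("anthropic", {}, {}); // null
```

On a Node.js server, `env` would typically be `process.env`. Returning `null` lets the UI prompt for a missing key instead of sending an unauthenticated request.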

What Worked Well

- User-Friendly Documentation: The README provides clear, step-by-step instructions for setup and usage, which has likely lowered the barrier for new users. Visuals for the configuration steps make them easier to follow.

- Feature Requests and Tracking: Tracking requested features and their status (completed vs. in progress) in a structured way has kept the community engaged and informed about the project’s direction.

- Integrated Terminal: An integrated terminal that shows the output of commands run by the LLM has proven valuable for debugging and for understanding the model’s behavior.

- Cross-Provider Support: Supporting multiple LLM providers has attracted a diverse user base, since users can choose the model best suited to their needs.

What I’d Do Differently

- Tackle High-Priority Features First: Many features have been implemented, but focusing earlier on high-priority items (such as file locking and better prompting for smaller LLMs) could have improved stability and usability sooner.

- Enhanced Testing: Adopting a more robust testing framework earlier in development would help catch bugs before they reach users, improving overall reliability.

- More Comprehensive Error Handling: Better error detection and handling would improve the user experience, especially for those less familiar with coding. For example, the app could suggest fixes for errors it detects in the terminal.

- Streamlined Contribution Process: A simpler contribution process could encourage more community involvement, for example through clearer guidelines or templates for submitting issues and pull requests.
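Suggesting fixes for detected terminal errors, as proposed in the error-handling item, could start as a table of pattern-matching rules. The sketch below is hypothetical: `suggestFixes` and its three rules are illustrations that match Node's `Cannot find module` and `EADDRINUSE` messages and bash's `command not found` message.

```javascript
// Hypothetical rules: each maps a recognizable error in terminal output
// to a plain-language suggestion built from the regex capture groups.
const ERROR_RULES = [
  {
    // Node: Error: Cannot find module 'express' (bare package names only,
    // so relative paths like './utils' are not mistaken for npm packages)
    pattern: /Cannot find module '([^'./][^']*)'/,
    suggest: (m) => `Module "${m[1]}" is missing. Try: npm install ${m[1]}`,
  },
  {
    // Node: Error: listen EADDRINUSE: address already in use :::3000
    pattern: /EADDRINUSE.*:(\d+)/,
    suggest: (m) =>
      `Port ${m[1]} is already in use. Stop the other process or choose another port.`,
  },
  {
    // bash: pnpm: command not found
    pattern: /(\S+): command not found/,
    suggest: (m) => `"${m[1]}" is not installed or not on your PATH.`,
  },
];

// Return every suggestion whose rule matches the given terminal output.
function suggestFixes(terminalOutput) {
  const suggestions = [];
  for (const { pattern, suggest } of ERROR_RULES) {
    const match = terminalOutput.match(pattern);
    if (match) {
      suggestions.push(suggest(match));
    }
  }
  return suggestions;
}

console.log(suggestFixes("Error: Cannot find module 'express'"));
```

Because the rules are data, new error patterns can be added without changing `suggestFixes` itself.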

Advice for Others

- Engage Your Community: Actively involve your users in development. Use platforms like GitHub for feature requests and discussions to foster a sense of ownership and collaboration.

- Invest in Documentation: Comprehensive, user-friendly documentation is crucial. It helps users get started and reduces the number of support requests and issues.

- Iterate Based on Feedback: Regularly solicit feedback from users and be willing to rework features based on their experience. This leads to a more user-centered product.

- Plan for Scalability: As your project grows, make sure your architecture can absorb the added complexity. From the outset, consider how new features will integrate with existing ones.

- Focus on User Experience: Prioritize user experience in every design and development decision. A smooth, intuitive interface can significantly improve user satisfaction and retention.

What’s Next?

Conclusion

Project Development Analytics

Timeline (Gantt chart)

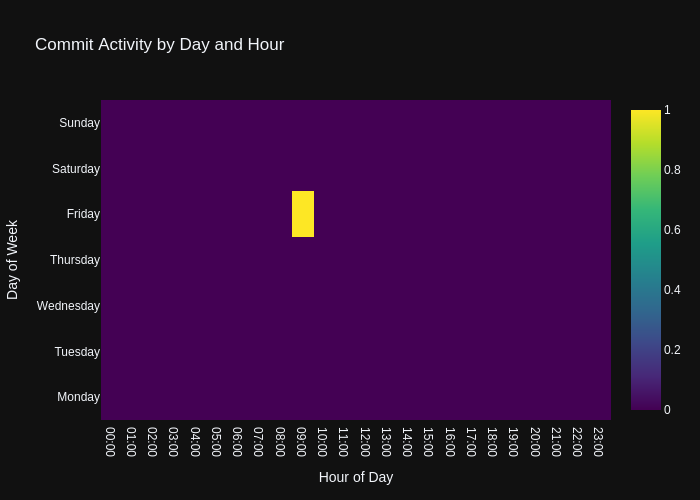

Commit Activity Heatmap

Contributor Network

Commit Activity Patterns

Code Frequency

- Repository URL: https://github.com/wanghaisheng/bolt.diy

- Stars: 0

- Forks: 0

Edited and compiled by Heisenberg. Last updated: January 6, 2025.